World Heart Federation (WHF) estimated that 20.5 million people died from cardiovascular diseases in 2023, representing 34% of all global deaths. Out of this, 72.1% were unaware of their hypertension status. Heart sound analysis is a non-invasive technique that can be used to facilitate early identification of cardiac abnormalities such as murmurs.

Our project aims to employ machine learning for the analysis of heart sounds collected via devices such as a digital stethoscope, to facilitate the early identification of cardiac abnormalities and murmurs. We aim to expand this feature to smartphones, where people can easily check their heart health and determine when medical advice is necessary.

We used the publicly available PhysioNet 2022 challenge dataset, which contains 5000+ heart sound recordings, labelled for murmur presence and normality. Previous studies, such as McDonald et al. (2022) - the winners of the aforementioned challenge, use various classification techniques including CNNs and RNNs. None, however, apply the classification to classify Mobile phone heart recordings, or modify the model to account for the same.

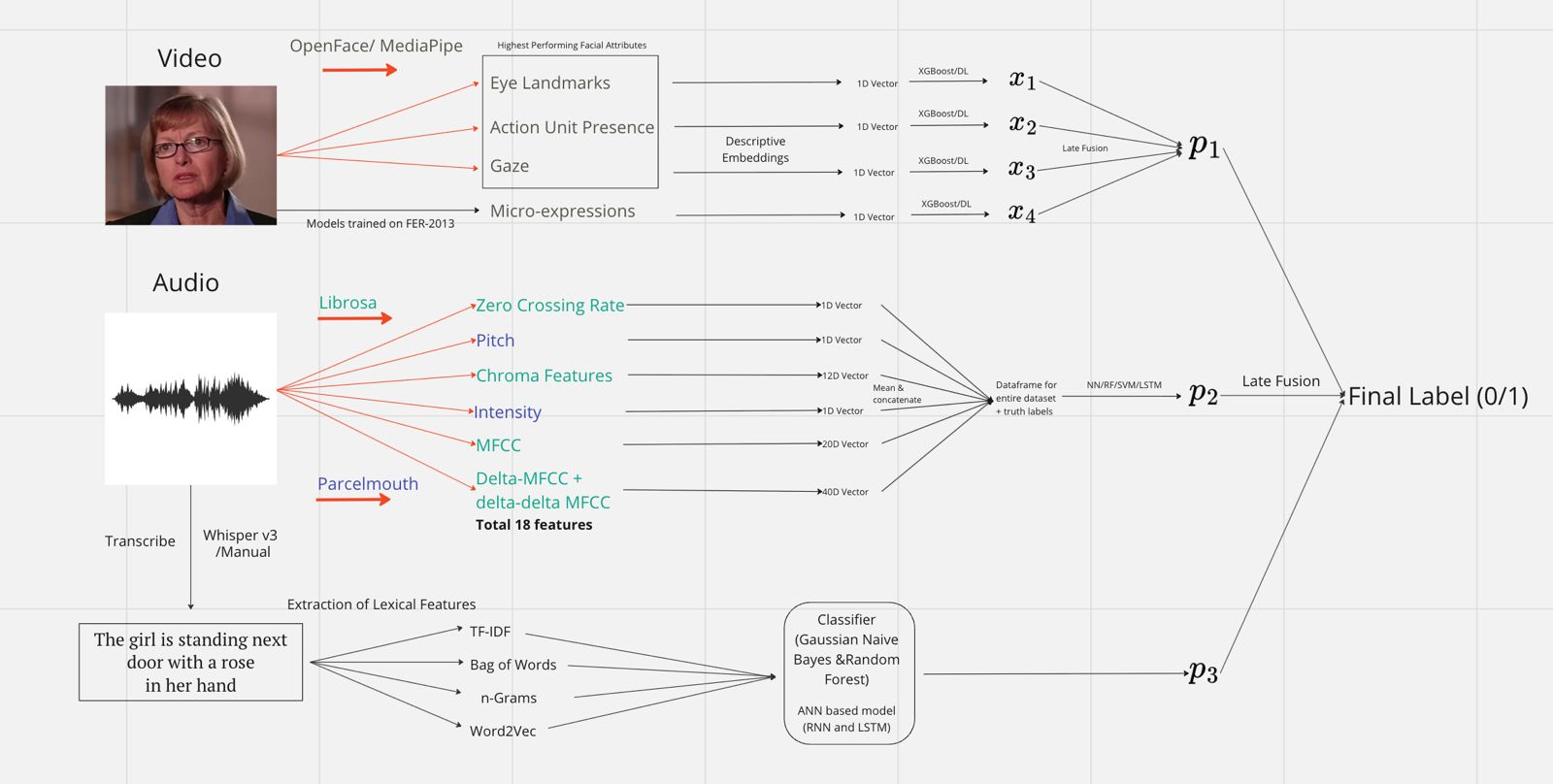

After preprocessing the data by clipping, downsampling, and filtering using a butterworth filter, we used the extracted MFCC images of the sound as input to our model. After trying both CNNs and RNN (LSTM) for classification, we observed better performance with the CNN model. This model accounted for the temporal variation in the sound, and was robust enough to classify the sub-par mobile recordings.

Performance metrics - Cost and Weighted Accuracy, defined by the dataset creators Physionet, were used to evaluate our model. These were designed primarily to prevent false negatives (non-diagnosis of CVD). Our model would rank 1st amongst all challenge participants with a low cost of 8388, and a weighted accuracy of 0.716.